The lens on your camera magnifies any slight vibrations in your hands, resulting in blurry images. As well as digital stabilization, there are now two mechanical solutions – in-lens optical stabilization with moving lens elements, and on-chip sensor stabilization, where the sensor itself moves.

Both methods are technically ‘optical’ (as opposed to digital), and on-chip sensor stabilization is the clear winner in every case except film SLRs. On DSLRs and mirrorless cameras, one new body brings stabilization to all your lenses. Phones have an image sensor for each built-in lens, but on-chip stabilization means the phone can have better lenses.

The camera manufacturers seem to agree, too. Sensor stabilization built into camera bodies has become widespread in the new generation of mirrorless cameras, though traditionally Nikon and Canon added stabilization to their lenses instead. Even high-end phones haven’t quite taken the plunge yet, though. Some of the industry rumour sites (cough Digitimes cough) are excitedly suggesting that future versions of the iPhone will get this technology, and it’s easy to imagine.

A good example is the iPhone 11 Pro, which has optical image stabilization in its standard and tele (2x) lenses, but not on its wide (0.5x) camera. With on-chip stabilization on every camera, every zoom level would have a stabilized image, and there would be scope to improve the optics for the 1x and 2x lens components since the stabilization would be handled without any moving lens elements. Admittedly that’s no guarantee that Apple would use a stabilized chip on each camera, but surely Apple and their ilk wouldn’t mind the bulk discount on components?

(A quick aside: much may depend on how Samsung’s heavy marketing push on megapixel numbers goes, since their flagship Galaxy S20 Ultra has been launched with a mix of sensors across its assorted cameras.)

How are the systems compared?

Comparing these systems does suppose that the stabilization they provide is of equal standard. Is it? And how is that measured?

The industry body CIPA does have a measuring method used by the big firms, and its results are given in ‘stops,’ a term familiar to most photographers once they’ve ventured past ‘Auto’ mode. The rating is the number of stops longer you can keep the shutter open than you otherwise would and still expect the same level of sharpness. For example, if you got a sharp image at 1/125 sec, then stabilization rated at 1 stop would mean you’d get an equally sharp shot at 1/60 sec (the shutter open roughly twice as long).
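To make the arithmetic concrete, here is a minimal sketch of how a stop rating translates into shutter time. The function name `slowest_shutter` is made up for illustration; the only rule it encodes is that each stop doubles the exposure time.

```python
def slowest_shutter(base_seconds: float, stops: float) -> float:
    """Longest exposure expected to stay as sharp as `base_seconds`.

    Each stop of stabilization doubles the usable exposure time.
    """
    return base_seconds * 2 ** stops


if __name__ == "__main__":
    base = 1 / 125  # sharp hand-held without stabilization
    for stops in (1, 4, 5):
        seconds = slowest_shutter(base, stops)
        print(f"{stops} stop(s): {seconds:.3f} sec")
```

Exactly one stop slower than 1/125 sec is 1/62.5 sec, which cameras label as 1/60. By the same rule, a 4-stop rating stretches 1/125 sec to 16/125 sec, roughly 1/8 sec.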

Canon achieves 4 (and in some cases 5) stops’ worth of image stabilization on their lenses with built-in Image Stabilization. Some Nikon lenses have an equivalent technology called VR (Vibration Reduction). In both cases, some lenses offering this feature came out before the switch to digital was complete, and the tech is just as useful on a film SLR as on a digital one.

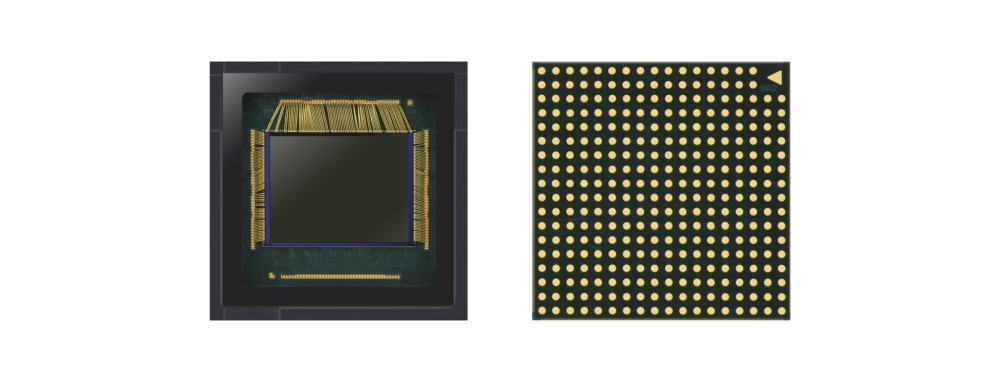

Sony introduced 5-axis image stabilization on the A7ii, which claims 4-5 stops of stabilization too. Assuming the same technical approach (a sensor that can not only shift horizontally and vertically but also rotate, so that it compensates for pitch, yaw and roll), the same should be possible on a smaller phone-sized chip.

New Possibilities Sensor Stabilization Opens Up

On-sensor stabilization is an exciting prospect for making life easier optically, even in the phone world, but the camera world is also finding some really interesting additional benefits. Given how much more processing power there is in a phone, and the creativity of the app market, these can only be the tip of the iceberg.

Sensor Stabilization Can Boost Megapixels

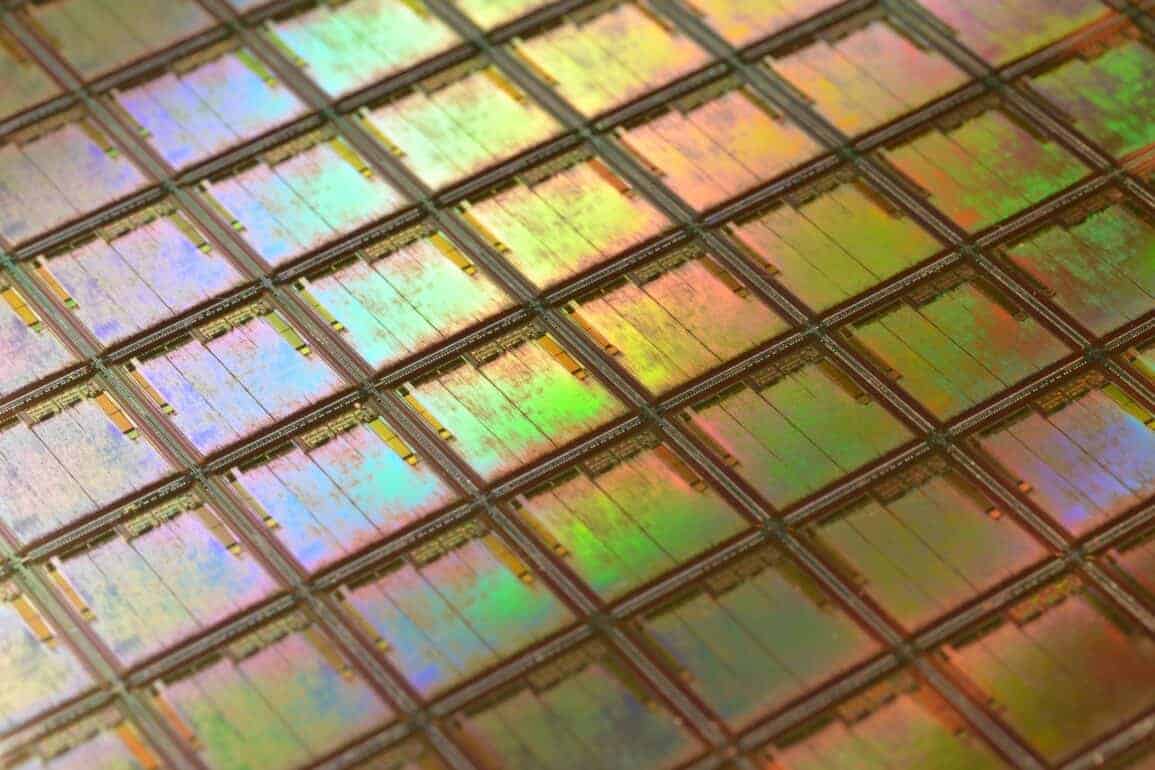

It might sound a bit crazy at first. After all, a sensor chip is a physical component and it will always have the same number of photosites (the individual pixels). In fact, though, the sensor stabilization mechanism means that the sensor can be moved a tiny amount between frames while a series of them is shot. The resulting images can then be composited in software, which is what the Olympus OM-D E-M5 MkII does. The result is 40-megapixel images from a 16-megapixel sensor and, believe it or not, it actually works, so long as you’re working on a tripod and your subject isn’t moving.
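The compositing idea can be sketched in a few lines. This is a deliberately simplified illustration, not the Olympus algorithm: it assumes four frames shot with the sensor offset by exactly half a pixel (none, right, down, and both), and simply interleaves them into a grid with twice the width and height. The function name and the frame layout (lists of rows) are assumptions made for the example.

```python
def interleave_shifted_frames(f00, f10, f01, f11):
    """Merge four same-sized frames into one with double the width and height.

    f00: sensor at rest; f10: shifted half a pixel right;
    f01: shifted half a pixel down; f11: shifted both ways.
    Each frame is a list of rows of pixel values.
    """
    height, width = len(f00), len(f00[0])
    out = [[0] * (2 * width) for _ in range(2 * height)]
    for y in range(height):
        for x in range(width):
            out[2 * y][2 * x] = f00[y][x]          # original sample position
            out[2 * y][2 * x + 1] = f10[y][x]      # sample between columns
            out[2 * y + 1][2 * x] = f01[y][x]      # sample between rows
            out[2 * y + 1][2 * x + 1] = f11[y][x]  # diagonal in-between sample
    return out
```

Real cameras shoot more frames and do far more processing, which is partly why the megapixel gain they quote is not a neat multiple. The sketch also shows why a tripod matters: if the camera or subject moves between frames, the interleaved samples no longer line up.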

Sensor Stabilization Unlocks Smart Features

Nocturnal landscape photographers who happen to have the Pentax K-1 or K-3ii will testify to the brilliance of the AstroTracer feature. Traditionally when shooting a nighttime landscape, you’d leave the camera fixed on the tripod and the stars would appear to move and leave trails (or, if you are of a more medieval persuasion, the stars actually move around you). The Pentax camera introduced a system whereby the camera could make use of on-board GPS and compass to automatically orientate the sensor to the stars during a long exposure. Before these cameras came along, the only alternative was mounting your camera on a tracking device.

There is no technical reason why Sony’s 5-axis system couldn’t be used for the same thing, if GPS and compass data were available. In phones all those technologies are already available – you’d just need to ensure no one called you while you were shooting and you’d need a good phone-tripod grip.
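For a feel of how far a sensor has to travel to follow the stars, here is a rough back-of-envelope sketch. It is not how Pentax or anyone else implements tracking; it only uses the fact that the sky appears to turn once per sidereal day. The function name, the example focal length and the pixel pitch are all assumptions chosen for illustration.

```python
import math

SIDEREAL_DAY_S = 86164.1  # seconds for the sky to appear to turn once


def star_drift_pixels(exposure_s, focal_length_mm, pixel_pitch_um,
                      declination_deg=0.0):
    """Approximate star drift across the sensor, in pixels, on a fixed tripod.

    Stars near the celestial equator (declination 0) drift fastest;
    stars near the pole barely move.
    """
    angle_rad = (2 * math.pi * exposure_s / SIDEREAL_DAY_S
                 * math.cos(math.radians(declination_deg)))
    drift_mm = focal_length_mm * math.tan(angle_rad)
    return drift_mm * 1000 / pixel_pitch_um


if __name__ == "__main__":
    # Assumed example: 30 sec exposure, 50 mm lens, 5 micron pixels.
    print(f"{star_drift_pixels(30, 50, 5):.1f} pixels of drift to cancel")
```

With those assumed numbers the drift comes to roughly 20 pixels, which is the amount a tracking sensor would need to move to keep the stars as points.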

Digital Stabilization v On-Chip Stabilization

These are not the same thing, even though we think of chips and digital as being closely related. When we call a process ‘digital,’ we mean that the computer is doing the work by processing the data. It’s very likely that your phone does this when shooting video, by cropping in a little on each frame, comparing the frames as they come in, then positioning each crop slightly differently so there appears to be less camera shake. Some digital methods can also be used to sharpen images, for example by taking multiple frames at a higher shutter speed and combining them.
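The cropping trick can be sketched roughly as follows. This is a toy illustration rather than any phone's actual pipeline: it assumes the shake of each frame has already been estimated as a pixel offset (the hard part, skipped here), and the function name and `margin` parameter are made up for the example.

```python
def stabilized_crop(frame, offset, margin):
    """Crop `frame` so the scene stays put despite a known shake offset.

    frame:  list of rows of pixel values.
    offset: (dx, dy) in whole pixels, how far the scene has shifted
            right/down in this frame (assumed to be estimated elsewhere).
    margin: pixels sacrificed on every side to leave room for the shift.
    """
    dx, dy = offset
    # The crop window cannot move further than the spare margin allows.
    dx = max(-margin, min(margin, dx))
    dy = max(-margin, min(margin, dy))
    height, width = len(frame), len(frame[0])
    top, left = margin + dy, margin + dx
    out_h, out_w = height - 2 * margin, width - 2 * margin
    return [row[left:left + out_w] for row in frame[top:top + out_h]]
```

Moving the crop window with the scene keeps the subject in the same place in the output, at the cost of the pixels lost to the margin. Shake larger than the margin cannot be corrected this way.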

Digital methods are bound by the limits of the sensor in question, though (and by any vibration it is exposed to). Optical methods compensate by making sure the photons of light which make up the image arrive in the right place on the image sensor chip, so they tend to produce better results (but are more mechanically complicated and more expensive to implement).

Camera salespeople are well aware of the advantages of optical technology, which is why they’ll use confusing terms when they’re trying to avoid admitting that a technology is digital, just as they do when they don’t want to say “digital zoom” (also a lesser technology). Ask yourself, what does this mean: “48-megapixel telephoto camera allowing 10x Hybrid Optic Zoom and ‘Super Resolution Zoom’ that uses AI for up to 100x zoom”? In truth the only hard fact is the 48 megapixels. The addition of the word ‘Hybrid’ implies that the 10x is going to be achieved at least in part digitally (using data from other lenses?) and ‘AI’ is a more open admission of digital involvement.

Related listening

Hear Dan and me talk about phones v cameras in Episode 8.